Posts

feeds justification

I realized I've left out a major part from my sequence of previous feed-related posts: a justification for why we should bother with a separate feed_sync_id.

So let's give it a shot!

The fundamental problem is:

AUTO_INCREMENTids are assigned in insertion order, but become visible to other threads in commit order.

To see how this causes a problem, consider the interactions and visibilities between three transactions to the same database:

t0: TRX0: BEGIN; INSERT INTO kv (ns, k, v) VALUES ("-", "k0", "v0"); COMMIT;

t1: TRX1: BEGIN; INSERT INTO kv (ns, k, v) VALUES ("-", "k1", "v1");

t2: TRX2: BEGIN; INSERT INTO kv (ns, k, v) VALUES ("-", "k2", "v2");

t3: TRX0: SELECT MAX(id) FROM kv;

t4: TRX2: COMMIT;

t5: TRX0: SELECT MAX(id) FROM kv;

t6: TRX1: COMMIT;

t7: TRX0: SELECT MAX(id) FROM kv;

Here, we have two transactions that both insert a new kv record.

The database has to assign an id value to each of those records, because we might be creating other associations to those records in our application code.

But other threads -- TRX0 in this case -- shouldn't be able to see those records until we COMMIT, and so indeed the SELECT at t=t3 might return 1.

Next, we commit TRX2.

This was the third insert the database saw, so we expect MAX(id) to be 3, and indeed, it is, as verified at t5.

Finally, we commit TRX1.

Consumers will now be able to see an id=2 record -- hooray!

Except: if we applied a feed-style consumption based on id instead of feed_sync_id, we have introduced the possibility of skipping records.

To see how this happens, consider a feed-style fetch w/ id at t=t5 above.

We will run a query like

SELECT *

FROM kv

WHERE id > :cursor

ORDER BY id

LIMIT :limit

and fetch

KV(id=1, ns=-, k=k0, v=v0)

KV(id=3, ns=-, k=k2, v=v2)

Note that there is no KV(id=2) record committed yet, so this response is correct!

The problem comes from subsequent fetches: In particular, we will record 3 as the cursor to use for the next fetch.

This will prevent the subsequent fetch (with cursor:=3) from ever observing the KV(id=2) record!

This is pretty bad, and that's why we pay the penalty of storing a separately assigned feed_sync_id column.

schema

After all the updates, I figure it'd be helpful to give a reference for the current schema we've built up over all the blog posts mentioned above:

CREATE TABLE `kv` (

`id` bigint(22) NOT NULL AUTO_INCREMENT,

`created_at` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,

`updated_at` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,

`feed_sync_id` bigint(22) DEFAULT NULL,

`shard` int(11) DEFAULT '0',

`ns` varchar(255) COLLATE utf8_bin NOT NULL DEFAULT '',

`k` varchar(255) COLLATE utf8_bin NOT NULL,

`v` longblob NOT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `u_ns_k` (`ns`,`k`),

UNIQUE KEY `u_fsi` (`feed_sync_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin;

macos log command

My work laptop has been randomly shutting itself off. I came across this stackoverflow post, it said to run

log show --predicate 'eventMessage contains "Previous shutdown cause"' --last 24h

which, for me, returns

risksys ➜ log show --predicate 'eventMessage contains "Previous shutdown cause"' --last 24h

Filtering the log data using "composedMessage CONTAINS "Previous shutdown cause""

Skipping info and debug messages, pass --info and/or --debug to include.

Timestamp Thread Type Activity PID TTL

2019-05-23 11:29:01.874486-0700 0xaf Default 0x0 0 0 kernel: (AppleSMC) Previous shutdown cause: -128

2019-05-23 11:44:49.786722-0700 0xaf Default 0x0 0 0 kernel: (AppleSMC) Previous shutdown cause: -128

--------------------------------------------------------------------------------------------------------------------

Log - Default: 2, Info: 0, Debug: 0, Error: 0, Fault: 0

Activity - Create: 0, Transition: 0, Actions: 0

This, combined with these MacOS Shutdown Causes paints a pretty bleak picture: I've got RAM issues :-(

absolutely minimal OLTP to OLAP pipeline

Suppose we have some data in a production OLTP database, and we need to send it to some OLAP database. This post describes one of the simplest approaches, and how to make it productional enough to rely on.

For every table t, we need to:

- introduce a new field,

updated_at - introduce a new index on that field, so we can get the records that changed after a certain

updated_at.

For example,

ALTER TABLE t

ADD COLUMN updated_at TIMESTAMP(3)

NOT NULL

DEFAULT CURRENT_TIMESTAMP(3)

ON UPDATE CURRENT_TIMESTAMP(3),

ADD INDEX idx__updated_at (updated_at);

We add the ON UPDATE CURRENT_TIMESTAMP so that we don't need to deal with any application code to give us what we need.

We add the idx__updated_at to support a new query pattern:

SELECT *

FROM t

WHERE updated_at >= :lastCutoff

ORDER BY updated_at

LIMIT :limit

Here, we're basically going to fetch the records that have been updated since the last time we ran the query, up to a given limit. The limit is an important mechanism, because it lets us paginate over the changed records. Bounding the amount of work we do for a single fetch lets us bound the expected execution time, so we can find out more aggressively when things are broken.

This consumes a single "page" of data. We will need to repeatedly execute this query to emit new records as they are updated.

non-strict inequality

The astute reader might have noticed we used >= in the lastCutoff portion of the query; this implies that we might see duplicates.

But it is also important, because in a given fetch, we might not have consumed all records with that timestamp.

For example, consider

rec(id=1, updated_at=1)

rec(id=2, updated_at=2)

rec(id=3, updated_at=3)

rec(id=4, updated_at=3)

rec(id=5, updated_at=4)

consumed from the start with lastCutoff=0 and limit=3.

The first fetch returns

rec(id=1, updated_at=1)

rec(id=2, updated_at=2)

rec(id=3, updated_at=3)

So if the next fetch query were to be

SELECT *

FROM t

WHERE updated_at > 3

ORDER BY updated_at

LIMIT 3

we would skip the id=4 record.

wedge possibility

The non-strict inequality induces a different problem, though. Consider

rec(id=1, updated_at=1)

rec(id=2, updated_at=1)

rec(id=3, updated_at=1)

rec(id=4, updated_at=1)

rec(id=5, updated_at=1)

consumed with lastCutoff=0 and limit=3.

The first fetch again returns the first three records, but the next query will be

SELECT *

FROM t

WHERE updated_at >= 1

ORDER BY updated_at

LIMIT 3

which will again return the first three records and therefore fail to make progress -- it has become wedged.

There are a variety of resolutions to this process, including:

don't actually resolve it, just

a. pick a relatively large

limitb. pick a relatively granular

updated_at.c. just rely on the monitoring provisions below to update these as needed.

use an adaptive limit that temporarily increases

limitif it detects a lack of progressuse a more feed-like mechanism instead

fixing duplicates

Whether or not we observe duplicates from the precise fetch mechanism, we can also observe duplicates from a sequence of updates like

rec(id=1, updated_at=1, value="t")

rec(id=1, updated_at=2, value="u")

rec(id=1, updated_at=3, value="v")

If observing the intermediate results is inconsequential, we can easily deduplicate these by picking the record with the greatest updated_at value.

missing intermediate updates / only guarantees LAST update

It should be pretty clear that this mechanism does not necessarily deliver all updates.

If my first fetch runs at t>3 against this history of rec values:

rec(id=1, updated_at=1, value="t")

rec(id=1, updated_at=2, value="u")

rec(id=1, updated_at=3, value="v")

the database will only actually have a single record:

rec(id=1, updated_at=3, value="v")

and therefore, we will never emit the value=t or value=u records!

In a lot of systems, this can be ok! But if it's not, we must consider either a different mechanism or a different data model. As an example, a different data model could store

rec_update(id=101, rec_id=1, updated_at=1, value="t")

rec_update(id=102, rec_id=1, updated_at=2, value="u")

rec_update(id=103, rec_id=1, updated_at=3, value="v")

monitoring

This being an online system, we should define some appropriate correctness / monitoring criteria.

One possible correctness property of this approach is

the most recently processed

updated_atis sufficiently recent

but this might not be appropriate if the table being replicated can have long gaps between updates. A slightly better variant might be

the most recent successfully poll operation occurred sufficiently recently

This is good to ensure that we're making progress and that our process hasn't broken.

But even if our polling process is working, it might be that the limit or polling frequency are simply not high enough to keep up!

To account for that, we can instead check that

the number of records after

lastCutoffis sufficiently small

This works ok, except 1) we need cron-style monitoring for this process as well, and 2) if we are very behind, such a count might be prohibitively expensive. We can lessen the overhead of (2) by changing to:

the difference between the max

updated_atin the table & thelastCutoffis sufficiently small

Here, the index on updated_at makes the query effectively constant-time, which is great!

But it does sacrifice the knowledge of how many records we're behind by, which is often a better indication of how much work we need to do to catch up.

One intermediate approach is executing a subquery with a LIMIT clause, so we can obtain precise backlog figures when it is cheap enough to do so, and otherwise tops out at that limit.

I've seen those limits set between 1000 and 100000 in various production systems I've worked with, and they have been pretty effective.

comparison with feeds

This mechanism bears a lot of similarity to MySQL feeds, but with a few major differences:

- this polling approach is much simpler than feeds because it doesn't need the

feed_sync_idassignment process or any API - presence of the API layer in the feed approach make it possible to perform transformations on the exposed records, while there is no such capability in this approach

- the feed approach avoids the "wedge possibility" problem since it is impossible to have multiple records with the same

feed_sync_id

smart arguments

It's easy to love the terseness one can get out of a command-line tool with a "pass by order" convention. For example, maybe I run something like

deploy myapp sea2 production

to deploy an application called myapp to the second production datacenter near Seattle.

This works great, except now I need to remember the order of arguments is: deploy [app] [datacenter] [environment].

The typical solve for this problem is introducing named arguments, so you'd end up with

deploy --app myapp --datacenter sea2 --environment production

This no longer depends on the order, because we're explicit about which value we're passing for each argument. The command itself is a LOT longer though!

In [41]: len("deploy myapp sea2 production")

Out[41]: 28

In [42]: len("deploy --app myapp --datacenter sea2 --environment production")

Out[42]: 61

We can improve this a bit with short named arguments, like

deploy -a myapp -d sea2 -e production

In [43]: len("deploy -a myapp -d sea2 -e production")

Out[43]: 37

but now we still need to remember that the arguments are a, d, and e and which values they'll correspond to.

Indeed, we might even have some code that checks that the value of environment is either "staging" or "production"!

So what if we turned that on its head? We can use our error checking code to automagically figure out which argument value corresponds to which argument!

formalism

- you know you need N args,

a0...aN.- In the above example,

a0=myapp,a1=sea2,a2=production.

- In the above example,

- each arg value

a_khas a qualifier predicateq_kthat returns true for a valuevifvis valid for arga_k - last

vwins (e.g.http://a.com+http://b.comprefers the second)

example 1

Suppose we want a markdown link generator, which takes two args:

- a url (like

https://traviscj.com/blog)- qualifier predicate: "starts with http"

- qualifier predicate: "contains ://"

- a slug (like

myblog)- qualifier predicate:

!url

- qualifier predicate:

example 2

Another nice example is if our arguments are a combination of app, datacenter, and environment, with values like these:

- app:

a/b/c/ ... - datacenter:

sea1/sea2/sea3/ ... /ord1/ord2/ord3/ ... - environment:

staging/production

We can define simple predicates for the latter two:

is_datacenter(a):=a.startswith("sea") or a.startswith("ord")is_environment(a):=a in ["staging", "production"]

The app arg is a bit trickier, though, because the engineers might name things however they want!

The ideal case would be some internal app that maintains a list of valid applications, but failing that, we could also declare something like

# is_app(a) := not is_datacenter(a) and not is_environment(a)

def is_app(a):

return not is_datacenter(a) and not is_environment(a)

example 3

md link generator w/ alt text

like ex1, but:

a link text

- qual predicate: slug arg exists & string length greater than slug arg length

- qual predicate: any whitespace

test impl in

Scratch_kFFXI4

ambiguity resolutions

One trouble we might have is a given argument value qualifying for multiple arguments. When that happens, we can (roughly in order of increasing danger):

- blow up

- ask for resolution (ie prompt)

- assume a default value

- guess

- loop over possibilities

other extensions

- disqualifier predicates

feed sequences

In the mysql feeds post, I mentioned that the publisher could do

SELECT MAX(feed_sync_id)+1

FROM kv

to find the next feed_sync_id during the publishing process, but

this is actually a really bad idea.

(And I knew it at the time, so forgive me for selling lies...)

Republishing

Before we jump into the problematic scenario, I'd like to motivate it with a tiny bit of background.

The republish operation is extremely useful when consumers need to receive updates. It is also extremely simple! A query like

UPDATE kv

SET v = "jim"

WHERE k = "fred";

is just amended to include feed_sync_id = null, as in:

UPDATE kv

SET v = "jim"

, feed_sync_id = null

WHERE k = "fred";

By updating feed_sync_id in the same query (or at least transaction) as the other updates, we can lean on the database's atomicity guarantee to ensure that either both fields get updated successfully or a rollback occurs.

In the latter case, the application block further processing until an update occurs.

From there, we can guarantee that the feed mechanism will deliver the final update with at-least-once semantics.

at most once?

Consider the following scenario:

t=0: consumer: initialize with cursor=""

t=1: publisher: KV(k="fred", v="bob", feed_sync_id=None, version=1)

t=2: publisher: KV(k="fred", v="bob", feed_sync_id=1, version=2)

t=3: consumer: consumes KV(fsi=1, version=2); updates cursor=1

t=4: publisher: KV(k="fred", v="jim", feed_sync_id=None, version=3)

t=5: publisher: KV(k="fred", v="jim", feed_sync_id=1, version=4)

t=6: consumer: fetches KV w/ fsi > 1; nothing to do!

To summarize, we've republished the last record (by feed_sync_id) after the consumer has already consumed it, and thereby missed the subsequent updates!

That is, the consumer does not see version=4 of KV(k="fred")!

sequence-based updates

To rule this out, we need to ensure that the feed_sync_id monotonically increases, even with arbitrarily republished records.

Introduce a sequences table, like:

CREATE TABLE `sequences` (

id BIGINT(20) NOT NULL AUTO_INCREMENT,

name VARCHAR(255) NOT NULL,

value BIGINT(20) NOT NULL,

version BIGINT(20) NOT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `u_name` (`name`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

where value stores the maximal feed sync id assigned, for a sequence identified by name.

The inclusion of the name field allows this table to support multiple feed publishers from a single database instance.

The version column can be used for optimistic locking, or simply omitted.

The publish operation will now need to

find the next

kvrecord to publishnext_id = sql(""" SELECT id FROM kv WHERE feed_sync_id IS NULL LIMIT 1 """)query

sequences, likeseq = sql(""" SELECT * FROM sequences WHERE sequences.name = :sn """, sn=sequence_name) next_fsi = seq.value + 1use

next_fsias thefeed_sync_idfor the nextkvrecord we publishsql(""" UPDATE kv SET feed_sync_id = :fsi WHERE id = :id """, fsi=next_fsi, id=next_id)finally, advance the

sequences.value(assuming we actually assignednext_fsi):sql(""" UPDATE sequences SET value = :mf WHERE name = :sn """, mf=next_fsi, sn=name)

Critically, (1)-(4) should occur within a single transaction; otherwise a feed fetch for a given cursor might return different records!

pattern: extract mutating variables to State class

I've come to really like the pattern of extracting local or instance variables into their own State class, and writing an algorithm in terms of that State.

A really trivial (if contrived) example of this would be a "sum cubes" algorithm; something like this:

public class CubeSummer {

private final PrintStream printStream;

private long i;

private long sum;

public CubeSummer() {

this.printStream = System.out;

reset();

}

public long sumCubes(long limit) {

for (; i<limit; i++) {

sum += i * i * i;

display();

}

return sum;

}

public void reset() {

i = 0;

sum = 0;

}

public void display() {

printStream.print("i = " + i + ";");

printStream.println(" sum = " + sum);

}

}

What's happened here is we've essentially promoted some variables that should arguably be local variables into instance variables, to avoid needing to pass them around explicitly.

But in the process, we've also mixed long-lived state with short-lived state, broken thread safety, and forced clients of this class into a particular calling convention (either instantiate an instance per sumCubes call or carefully respect thread safety and call #reset().)

Put a different way:

The lifecycle of i/sum are not very clear -- are they scoped to a particular sumCubes operation? Or across many invocations? or something else entirely?

Is this class thread-safe?

Who is responsible for resetting the per-request state -- the sumCubes method, or its caller?

If it is the subCubes method, is that reset behavior guaranteed even when something terrible (an exception) happens inside the method?

To make it more clear, we can pull out the i & sum variables into a new state class like

public static class CubeSumState {

public long i = 0;

public long sum = 0;

}

With those definitions in mind, our implementation becomes:

public long sumCubes(long limit) {

CubeSumState state = new CubeSumState();

for (; state.i < limit; state.i++) {

state.sum += state.i * state.i * state.i;

display(state);

}

return state.sum;

}

Now the lifecycle of state is now very clear -- it's exactly one invocation of sumCubes!

By removing the "per-request" state from the CubeSummer class, we've also made it thread-safe and safe to use as a singleton.

We haven't bothered with this here, but if it was useful, we could incorporate validation logic into the setters of our State object to ensure that we never enter an invalid state.

Also note that we're not strictly bound to our custom debugging output anymore: we can replace the display() with something like

public class CubeSummer {

// ...

private final Gson gson;

public void display(CubeSumState state) {

printStream.println(gson.toJson(state));

}

}

This genericism comes with an immense benefit:

it is precisely a structured log of all the relevant state, which can be used for for subsequent (re)interpretation or as a checkpoint for later resumption.

It also automatically "adapts" if we introduce new fields into CubeSumState!

This approach also has similar benefits for debugging, since all state and mutations are focused within a single object.

This example is, of course, a bit artificial: a pair of long wouldn't normally require a custom object to represent.

But the distinction between state that is

- long-lived / reused across many invocations

- short-lived / used for a single invocation

is an extremely useful one in practice.

edit 2019-01-09: thanks Wouter for recommending some great improvements here wrt naming + correctness; nobody who has worked with him would be surprised at his rigor :-)

unreasonable wish -- an sql interface to websites

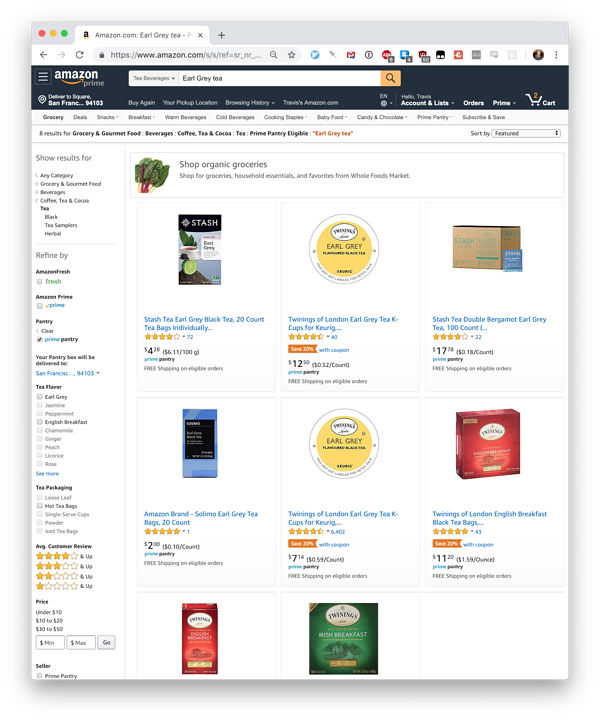

I was searching Amazon Prime for some Earl Grey tea for my wife. I got these results

This was just a basic search for earl grey tea in the Prime Pantry store.

I would love to

SELECT *

FROM products p

WHERE p.prime_pantry = 1

AND p.keywords = 'earl grey tea'

AND p.packaging = 'bulk'

I get that this'll never happen, but man... it'd be nice.

more light, better pictures

We were trying to take some Christmas pictures but feeling a bit unsatisfied with the way the pictures were coming out:

I wanted some flash or something, but the batteries on all the "better" cameras were already dead, so I went looking for an alternative. We tried to get the overhead light turned on, but couldn't find the switch for that.

The next-closest source of light was a sheet music lamp on my mom's piano! Borrowing that for the cause, I was able to get this one instead:

Pretty happy with that!

watching progress in mysql

Pretty frequently at work, I end up polling a database with some command like

SELECT MAX(id) FROM my_tbl;

SELECT MAX(id) FROM my_tbl;

SELECT MAX(id) FROM my_tbl;

SELECT MAX(id) FROM my_tbl;

-- .... ad nauseam ....

I've eventually noticed a few patterns I use pretty consistently:

estimate ETA by including NOW()/UNIX_TIMESTAMP()

Generally, the point of hovering over the table is to get an estimate of when it will finish/catch up/whatever. For that, you generally want to include a timestamp in the query output, so when you come back a while later, you can know exactly how much time has elapsed. I might transform the above query into

SELECT

NOW()

, UNIX_TIMESTAMP()

, MAX(feed_sync_id)

FROM kv;

Here, I include NOW() for a human-readable time, UNIX_TIMESTAMP() to make it easier to estimate the duration between a pair of query results by avoiding the need to add in hr*60*60 + min*60, and of course the actual value I'm interested in.

roll the starting point into subsequent queries

If I run

mysql root@localhost:traviscj_development> SELECT NOW(), UNIX_TIMESTAMP(), MAX(feed_sync_id) FROM kv;

+---------------------+------------------+-------------------+

| NOW() | UNIX_TIMESTAMP() | MAX(feed_sync_id) |

+---------------------+------------------+-------------------+

| 2019-01-03 20:03:18 | 1546545798 | 15 |

+---------------------+------------------+-------------------+

1 row in set

Time: 0.048s

and I know I need to get through a million feed_sync_ids, I might further add a couple of clauses like

1e6 - MAX(feed_sync_id) AS remaining

UNIX_TIMESTAMP() - 1546545798 AS elapsed

which can be combined to estimate an ETA if useful.

structured lookback in subsequent queries

We can even take it one step further:

CREATE TEMPORARY TABLE poll_metrics (

metric VARCHAR(255) NOT NULL DEFAULT 'default',

ts TIMESTAMP(3) NOT NULL DEFAULT CURRENT_TIMESTAMP(3),

value DOUBLE NOT NULL DEFAULT '0'

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

This has some nice properties!

- it's a temporary table, so it doesn't interfere with anything else or have very much risk of becoming HUGE or anything like that -- it's not even visible to other clients of that database!

- it will automatically populate any of the fields if we know we're only gathering a single metric...

- but it allows us to collect multiple metrics if we want to!

We can easily populate this from querying a different table, as simply as

INSERT INTO poll_metrics (value)

SELECT 13*(SELECT MAX(feed_sync_id) FROM kv);

or as we want:

INSERT INTO poll_metrics (metric, ts, value)

SELECT

"kv_prog"

, NOW(3)

, (SELECT MAX(feed_sync_id) FROM kv);

Together, those two queries would result in:

mysql root@localhost:traviscj_development> SELECT * FROM poll_metrics;

+---------+----------------------------+-------+

| metric | ts | value |

+---------+----------------------------+-------+

| default | 2019-01-03 20:30:42.693000 | 195.0 |

| kv_prog | 2019-01-03 20:30:59.090000 | 15.0 |

+---------+----------------------------+-------+

2 rows in set

Time: 0.005s

stored in a table for other querying.

personal finance resources

I wanted to say a tiny bit about personal finance stuff, as I feel like I have gotten a fairly good feel for it.

Some great resources to start getting up to speed include:

- r/personalfinance, especially

index investments

Ideally you want to own a lot of different companies, so you've diversified your asset ownership. One of the easiest ways to do this is through index investing, where the index "tracks" a broad swath of the market, like "All S&P 500 Companies" or "All stock market companies".

My preferred way to do this these days is via the Vanguard funds through E*Trade, like:

These are a great funds because of their really low expense ratios (0.04%, 0.04%, 0.05%, respectively) and lack of brokerage fees (so you don't pay E*Trade to put them on!).

E*Trade does have a bit of a learning curve though, even when you're just trading index ETFs: you have to pick the asset allocations by buying and selling individual stocks, you have to do stuff (like buy more stocks!) with your dividends, you need to watch out that you're not buying ETFs with commissions, and soforth.

You also need to be making big enough deposits to buy entire shares of the ETFs you want to buy; a single share of VOO, for example, will set you back about $230 as I write this!

All of that is a bit simpler with a "robo-advisor" like Betterment. (Forgive me, this is a referral link! This one is a non-referral link if you'd prefer that.) Here, you simply pick the ratio of stocks vs bonds and a risk tolerance and then Betterment balances your portfolio toward that target, reinvesting dividends in more shares, and efficiently rebalancing and even harvesting tax losses!

side investments

Once you've got a bit of a grasp on the "basics", you can also branch out a bit into some more exotic investments.

LendingClub is one interesting example. They allow borrowers to apply for loans and investors to those loans, where a single loan is funded by potentially many investors.

And of course, Coinbase remains a solid bet for your cryptocurrency investment/gambling needs.

final thoughts

My parting advice is to seek out a VERY strong understanding of the tax implications of your trading activity before doing it for real. I did a lot of paper trading that neglected the impact of taxes on the overall return of the strategy or trade; you really need to understand those impacts to make the correct investment decisions for you!

I should also disclose that I hold some long positions in everything I've mentioned here, and that you should consult a paid professional before taking any of the above advice!

subscribe via RSS